Most of my illustrious career has been spent working or consulting for Fortune 1000 companies. These companies are fundamentally dependent on their computer systems, particularly their online systems, to transact business.

If the systems are down, the business stops running.

In fact, the systems don’t even have to be down to create havoc.

What if the response time is too slow? If you’ve ever done user testing with people whose job it is to enter money-making financial transactions for large corporations, you may have been amazed, as I was, at how fast they are.

Obviously then, the software you build for them has to be even faster; split-second response time is required. If your software is slowing people down, the business is losing money.

Or what if people are sitting around staring at their monitors because they can’t figure out how that great new interface you gave them is supposed to work?

Bad news.

Again, there’s a measurable loss of revenue, because these people are not supposed to be staring at their monitors; they’re supposed to be entering those money-making financial transactions, remember?

I could go on with this, but I think we both get the point: As a technologist working with these companies, you’re held to an exacting standard, because the cost of failure is high.

For example . . .

One evening many years ago, I put a software upgrade into production for a client, a major electronics distributor.

It was a pretty straightforward upgrade, and we tested it, but I guess we didn’t test it diligently enough on certain boundary cases. When I came in the next morning, I was informed that our “upgrade” had crashed, keeping the online system from coming up for an hour until the upgrade could be backed out and order restored.

In other words, we had effectively put the company out of business for an hour, a really expensive mistake. I was further informed that the CIO wished to talk with us in his office once he was finished getting his ass kicked by executive management.

Well, my dick was limp, I’ll tell you.

I took a moment to divide the company’s annual revenue by the number of business hours in a year. According to my calculations, this fiasco had cost about $250,000.

I popped another Xanax and washed it down with a pot of coffee to keep from passing out. I was twitching like a chicken for hours.

Yes, and thanks to experiences like that, I now consider myself a seasoned developer. I try to anticipate the consequences of technical decisions early in a project in an effort to avoid downstream catastrophes.

But I don’t work on mission-critical applications now.

I work on Web applications.

And with different kinds of applications come different kinds of developers.

Most Web developers have worked exclusively on systems where the cost of failure is very low, so they rarely ponder the implications of technical decisions in great detail.

Why bother? What’s the worst thing that could happen?

Well, the Web site could have unpredictable access times, it could scale poorly, or users could be unable to navigate the interface.

But so what?

As I write this, people still expect Web sites to have unpredictable access times, to scale poorly, and to have confusing interfaces.

Developers aren’t penalized for this; it’s all factored into the equation, as though improving the situation were beyond human capacity.

He who pays the piper is calling for a low-quality tune.

Okay, part of the problem is that most clients either don’t know any better or aren’t willing to pay for better.

And, you might say, why should they when users are still more than willing to forgive them for mediocrity?

But here’s the real problem:

Very few Web developers have had the edification that comes from blowing away a quarter of a million dollars of someone else’s money in an hour, not to mention the resulting shitrain that descends over the land.

Because if they had, they’d be a little more careful next time.

Massive accountability

Here’s a fun story about the benefits of really holding people accountable for shoddy workmanship.

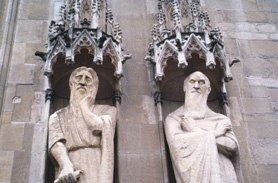

In The Innocents Abroad, Mark Twain wrote about King Xerxes, who in the 5th Century BC ordered a bridge of boats to be built across the Hellespont:

A moderate gale destroyed the flimsy structure, and the King, thinking that to publicly rebuke the contractors might have a good effect on the next set, called them out before the army and had them beheaded. In the next ten minutes he let a new contract for the bridge. It has been observed by ancient writers that the second bridge was a very good bridge.

Res ipsa loquitur.

Thus spoke The Programmer.